Neuromorphic Chips: Bridging the Gap Between Silicon and Synapses

In the relentless pursuit of faster, more efficient computing, a groundbreaking technology is emerging that could revolutionize the way we process information. Neuromorphic chips, inspired by the human brain's neural networks, are poised to transform everything from smartphones to supercomputers. But what exactly are these brain-like processors, and how might they reshape our digital landscape?

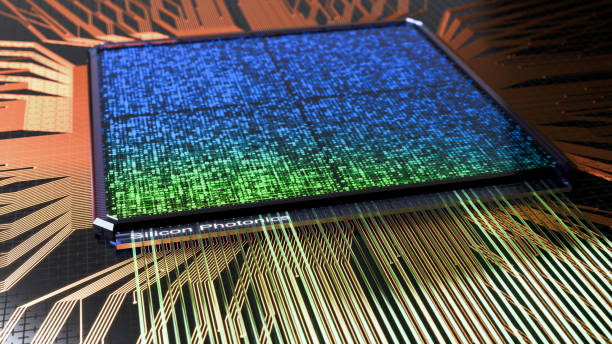

Unlike the traditional von Neumann architecture, which keeps memory and processing in separate units, neuromorphic chips integrate both functions, mirroring the way the brain’s synapses and neurons both store and process information. Because data no longer has to shuttle back and forth between a separate memory and processor, and many artificial neurons can operate at once, these chips could perform certain tasks quickly and with far less energy.
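One common way to illustrate "computing where the data lives" is a resistive crossbar. Treat the following as a minimal sketch under stated assumptions, not a description of any particular chip. Each weight is assumed to be stored as a conductance at the crossing of an input row and an output column. Applying voltages to the rows makes every column collect a current equal to the weighted sum of its inputs, so the multiply-accumulate happens inside the memory array itself. The function name `crossbar_currents` and the toy values are hypothetical.

```python
from typing import List


def crossbar_currents(voltages: List[float], conductances: List[List[float]]) -> List[float]:
    """Column output currents of an idealized resistive crossbar.

    conductances[i][j] is the (hypothetical) conductance stored at the
    crossing of input row i and output column j; voltages[i] drives row i.
    """
    if len(voltages) != len(conductances):
        raise ValueError("need exactly one input voltage per row")
    if not conductances:
        return []
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for v, row in zip(voltages, conductances):
        if len(row) != n_cols:
            raise ValueError("every row must have the same number of columns")
        for j, g in enumerate(row):
            # Ohm's law gives the current through one device (I = V * G);
            # Kirchhoff's current law adds all device currents in a column.
            # A physical array settles every column at once; this loop only
            # reproduces the arithmetic sequentially.
            currents[j] += v * g
    return currents


if __name__ == "__main__":
    # Two inputs, two outputs; units are arbitrary in this toy example.
    print(crossbar_currents([1.0, 2.0], [[1.0, 2.0], [3.0, 4.0]]))
```

Here `crossbar_currents([1.0, 2.0], [[1.0, 2.0], [3.0, 4.0]])` returns `[7.0, 10.0]`. That is the same result as a matrix-vector product, but the stored weights never leave the array.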

From silicon to synapses: How neuromorphic chips work

At their core, neuromorphic chips use artificial neurons and synapses built from specialized materials and circuit designs. These components can change their electrical properties, such as how strongly they conduct, in response to the signals passing through them, much like biological neurons strengthen or weaken their connections over time.
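A widely studied rule for this kind of strengthening and weakening is spike-timing-dependent plasticity (STDP). If an input spike arrives shortly before the neuron fires, the connection grows stronger. If it arrives shortly after, the connection grows weaker. The sketch below implements a simple pair-based version of STDP. It is an illustration only, not necessarily the rule any particular chip uses, and every parameter value and function name in it is a hypothetical choice.

```python
import math

# Hypothetical parameters, chosen only for illustration.
A_PLUS = 0.01      # largest strengthening from one pre/post spike pair
A_MINUS = 0.012    # largest weakening from one pre/post spike pair
TAU_PLUS = 20.0    # ms, how quickly potentiation fades with the time gap
TAU_MINUS = 20.0   # ms, how quickly depression fades with the time gap
W_MIN, W_MAX = 0.0, 1.0


def stdp_delta(t_pre: float, t_post: float) -> float:
    """Weight change for a single pair of spike times (pair-based STDP)."""
    dt = t_post - t_pre
    if dt > 0:
        # Input spike arrived before the output spike: strengthen.
        return A_PLUS * math.exp(-dt / TAU_PLUS)
    if dt < 0:
        # Input spike arrived after the output spike: weaken.
        return -A_MINUS * math.exp(dt / TAU_MINUS)
    return 0.0


def update_weight(w: float, t_pre: float, t_post: float) -> float:
    """Apply one STDP update and keep the weight inside [W_MIN, W_MAX]."""
    return min(W_MAX, max(W_MIN, w + stdp_delta(t_pre, t_post)))


if __name__ == "__main__":
    print(update_weight(0.5, t_pre=10.0, t_post=15.0))  # slightly above 0.5
    print(update_weight(0.5, t_pre=15.0, t_post=10.0))  # slightly below 0.5
```

The exponential terms mean that closely timed spikes change the weight most. Spikes far apart in time barely change it at all.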

One key enabling technology is the memristor – a circuit element whose resistance depends on how much charge has flowed through it, so it effectively remembers its own history. By combining memristors with traditional CMOS technology, researchers have built experimental chips that can learn and adapt in real time, opening up new possibilities for machine learning and AI applications.
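The memory effect can be sketched with an idealized, linear charge-controlled model, loosely in the spirit of the linear ion-drift picture often used to introduce memristors. This is an assumption-laden toy, not a model of any real device: real memristors are nonlinear, vary from device to device, and can drift. The resistance values, the `Q_FULL` switching charge, and the `Memristor` class are all hypothetical.

```python
# Hypothetical device parameters, chosen only for illustration.
R_ON = 100.0        # ohms, fully "on" (low-resistance) state
R_OFF = 16_000.0    # ohms, fully "off" (high-resistance) state
Q_FULL = 1e-4       # coulombs of net charge needed to switch from off to on


class Memristor:
    """Idealized charge-controlled memristor with a linear state variable."""

    def __init__(self, state: float = 0.0) -> None:
        # state = 0.0 means fully off (R_OFF), 1.0 means fully on (R_ON).
        self.state = min(1.0, max(0.0, state))

    def resistance(self) -> float:
        # Resistance interpolates linearly between the two extremes.
        return R_ON * self.state + R_OFF * (1.0 - self.state)

    def apply_current(self, current: float, duration: float) -> None:
        # For a constant-current pulse, the charge that flows is q = i * t.
        # Positive charge pushes the device toward R_ON, negative toward R_OFF,
        # and the state saturates at the physical limits.
        charge = current * duration
        self.state = min(1.0, max(0.0, self.state + charge / Q_FULL))


if __name__ == "__main__":
    m = Memristor()
    print(m.resistance())           # 16000.0: starts fully off
    m.apply_current(1e-3, 0.05)     # 50 microcoulombs of charge
    print(m.resistance())           # about 8050 ohms
    m.apply_current(0.0, 10.0)      # no current: the state is retained
    print(m.resistance())           # still about 8050 ohms
```

The key behavior is in the last two lines. Once the current stops, the resistance stays where the accumulated charge left it. A synaptic weight stored this way needs no power to hold its value.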

The promises of brain-like processing

The potential advantages of neuromorphic computing are substantial. These chips could dramatically reduce power consumption while increasing processing speed for certain tasks. For instance, image and speech recognition – operations that typically require significant computational resources – could be performed with a fraction of the energy used by current systems.

Moreover, neuromorphic chips are well suited to tasks that involve pattern recognition and decision-making under uncertainty – areas where traditional computers often struggle to match the brain’s efficiency. This makes them promising candidates for applications ranging from autonomous vehicles to advanced robotics and even space exploration.

Current developments and future prospects

Several major tech companies and research institutions are investing heavily in neuromorphic technology. IBM’s TrueNorth chip, Intel’s Loihi, and BrainScaleS, a European initiative, are just a few examples of ongoing projects pushing the boundaries of brain-inspired computing.

The technology is still at an early stage, and most current applications focus on research and development. However, as it matures, we could see neuromorphic processors integrated into consumer devices, enhancing everything from smartphone cameras to smart home systems.

Challenges and limitations

Despite their promise, neuromorphic chips face several hurdles before widespread adoption. One major challenge is scalability – while current chips can simulate thousands or even millions of neurons, they’re still far from matching the complexity of the human brain, which contains roughly 86 billion neurons.

Another issue is software compatibility. Most existing programs are written for traditional computing architectures, so new programming paradigms may be needed to take full advantage of neuromorphic hardware.

The road ahead

As neuromorphic technology continues to evolve, it’s likely to complement rather than replace traditional computing in the near term. We might see hybrid systems that combine neuromorphic chips with conventional processors, each handling the tasks it is best suited for.

The potential impact of this technology extends far beyond faster gadgets. Neuromorphic computing could enable breakthroughs in fields like healthcare, where real-time analysis of complex biological data could lead to more accurate diagnoses and personalized treatments.

In conclusion, neuromorphic chips represent a fascinating convergence of neuroscience and computer engineering. While challenges remain, the promise of more efficient, adaptable, and powerful computing is driving rapid progress in this field. As we stand on the brink of this new era in computing, the future of technology may well look a lot more like the inside of our heads.